LMArena apologised after Meta used an experimental version of Llama 4 to achieve high scores; the performance of Llama 4 models hasn’t improved yet


LMArena had to apologize to developers after it was established that Meta used an experimental version of Llama 4 to achieve high scores.

“Meta’s interpretation of our policy did not match what we expect from model providers. Meta should have made it clearer that ‘Llama-4-Maverick-03-26-Experimental’ was a customized model to optimize for human preference. As a result of that we are updating our leaderboard policies to reinforce our commitment to fair, reproducible evaluations so this confusion doesn’t occur in the future,” LMArena wrote on X.


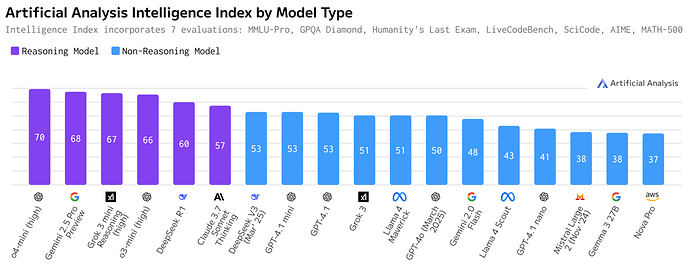

Llama 4 Maverick currently ranks below popular models such as GPT-4o, DeepSeek V3, Claude 3.5 Sonnet, and Google’s Gemini 1.5 Pro.


Current rankings by Artificial Analysis also put Llama 4 Maverick in fifth position among non-reasoning models.

Assessment

It’s evident that the performance of Llama 4 models hasn’t improved in the past two weeks, despite Meta’s head of Gen AI promising that the company was working to fix the bugs causing quality issues. LMArena’s confirmation that Meta used an experimental version also hurts the company’s credibility.